“Because we are prisoners of the words we choose, we better pick them carefully.” Giovanni Sartori (1970)

Introduction

I take my point of departure from Ludwig Wittgenstein, the Austrian-British philosopher who dealt primarily with the philosophy of language, logic and mathematics, and who is arguably the most influential philosopher of the 20th century.

In his magnum opus, the Tractatus, Wittgenstein concluded that the essence of language is its logical form. The logical structure of language sets the limit to what can be meaningfully said. In the later Philosophical Investigations, published posthumously in 1953, he came to the opposite conclusion, namely that the essence of language is its use.

For some social scientists and philosophers, the pragmatic-linguistic turn instigated by the Philosophical Investigations has meant that there can be no escaping the hermeneutic circle. There can be no objective reality in social science. All that’s left is interpretation.

A large part of the development of the social and cultural sciences occurs through conflict over words, terms, concepts and definitions. What this suggests is that we need some sort of criteria for what makes a “good concept” or what makes a proposition coherent.

We need criteria to reach shared agreement over the concepts we use. If we leave them open to endless interpretation, all we are left with is a perpetual sophistic debate.

For example, take the popular use of the term “structural reform” within European policymaking circles. This is supposedly the core proposition to generate growth in a period of compressed domestic demand (austerity). But what does it mean? Is it a concept that applies across all economies, regardless of their domestic political differences?

Concept stretching

Methodology is the logical structure and public procedure of scientific inquiry. It must be distinguished from technique. Giovanni Sartori, in the famous 1970 article that we’re discussing this week, “Concept Misformation in Comparative Politics”, argues that the over-conscious thinker is someone who refuses to discuss heat without a thermometer.

As the social world that we study expands, we increasingly require concepts that are able to travel, so that we can compare, contrast and make sense of that world. But this leads to the problem of conceptual stretching. In the study of comparative politics, broadening the meaning of our concepts to include more cases, and thereby widening their range of application, risks making those concepts meaningless.

‘Democracy’, ‘globalisation’, ‘populism’, ‘ideology’ and ‘capitalism’ are concepts that have been subject to conceptual stretching in the social sciences. They are concepts that are used to cover a lot of variation in the political world. If we assume that one of the defining characteristics of social scientific discourse is precision, then this becomes problematic.

In ordinary language use, it is less of a problem. At the same time, social scientists also seek to make “generalizable” claims, and therefore they must use generalisable concepts (with minimal attributes) that can travel to as many cases as possible.

So, the question is: how do we construct generalisable concepts without concept stretching?

Concepts stand prior to quantification

For Sartori, what we gain in extensional coverage we lose in precision, which is a defining characteristic of “scientific discourse”.

Simultaneously, universal concepts, if they are to be universal at all, must be empirical. That is, we must know it if we see it. They must have real observable attributes.

But what about those abstract concepts with limited empirical referents in the world, such as justice or morality? These have non-observable attributes. But even here we surely know justice when we see it (or not). It may not be directly observable in the world, but it is an abstract concept with real utility.

The problem of concept formation in the study of comparative politics often emerges from the distinction between differences in kind and differences in degree.

The latter lends itself to measurement (on interval scales) and quantification. The former lends itself to typologies (and ordinal scales). For many quantitative research problems, ambiguity is generally cleared up with better measurement, whereas in qualitative research it is often cleared up with taxonomies and typologies.

Many political science scholars tend to forget, however, that concept formation, even for the data technician, stands prior to quantification.

As Sartori points out, there can be no quantification without conceptualisation.

The process of thinking begins with natural language, and natural language is a fuzzy and messy affair. The logic of either-or cannot be replaced with the logic of more-or-less. This is much like the difference between linear algebra and Boolean logic.

Criteria for concept formation

We need clear criteria for what makes for a good concept, particularly if that concept shapes the measures and informs the variables we use for data analysis.

The classical approach to concept formation focused on classifications and taxonomies (starting with Aristotle, and directly observable in other sciences such as biology, chemistry and zoology). Classifications remain a central condition for any scientific discourse (think about the periodic table in chemistry, which was first published by Dmitri Mendeleev in 1869).

But it is important to remember that a concept is something conceived, and leads to a proposition that corresponds to some class of entities in the world.

In the classical tradition we can make a concept more general by reducing its properties or attributes, which widens its extension. Conversely, we make a concept more specific by adding attributes, which increases its intension. In this tradition, as we reduce the intension of a concept (its attributes) in order to apply the concept to more cases, we move up the ladder of generality.

This can create obvious problems.

For example, we can use the concept ‘capitalism’ as a general term to refer to a certain type of economic regime, and in ordinary language use most people will understand what we are talking about. But in political economy we “add adjectives” to specify what type of market economy we are referring to: liberal, coordinated, social democratic, statist, authoritarian. In turn, these concepts can be used to create a typology, whereby countries and sectors are classified under each of these different types of comparative capitalism.

Concepts are intimately related to the theories we use.

Concepts are, as Gerring (1999) states, “handmaidens to good theories”. Think about the use of the concept “social class”. This concept is used less and less in social science. Why? Is it because we don’t live in a world of social class divisions? Not really. Rather, because the concept is associated with a certain “theory” that is less and less popular in the social sciences (Marxism), it tends to fall out of discourse along with the theory.

Should we therefore replace the concept “social class” with indicators that are easy to operationalise, such as education level, income and occupation?

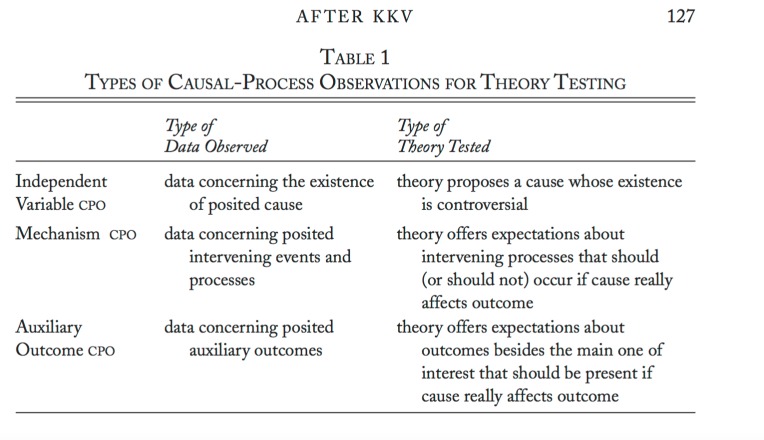

Table 1: Conceptualization and ladders of abstraction

| Levels of abstraction | Comparative scope and purpose | Logical and empirical properties |
| --- | --- | --- |
| HL: High-level categories. Universal conceptualisations | Cross-area comparisons among heterogeneous contexts (global theory) | Maximal extension. Minimal intension. Definition by negation |
| ML: Mid-level categories. General conceptualisations and taxonomies | Intra-area comparisons among relatively homogeneous contexts (middle-range theory) | Balanced extension and intension. Definition by analysis |
| LL: Low-level categories. Configurative conceptualisations | Case-by-case analysis (narrow-range theory) | Maximal intension. Minimal extension. Contextual definition |

Extension and intension

Sartori was one of the first comparativists to propose a framework for good concept formation in political science. As he states, “to compare is to control”.

The comparative method is one of the most powerful tools in political science. But it requires coherent and externally differentiated concepts.

He encouraged scholars to be attentive to context without abandoning broad comparisons (i.e. the capacity to generalize). Table 1 suggests that as we move up the ladder of abstraction we increase the extension (the set of entities in the world to which the concept refers) and reduce the intension (the set of meanings or attributes that define the concept).

There is an inverse relationship between extension and intension.
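The inverse relationship can be made concrete with a small sketch. The cases and attribute labels below are hypothetical, invented only for illustration (they are not taken from Sartori). The intension is treated as a set of required attributes, and the extension as the set of cases that exhibit all of them.

```python
# Hypothetical cases and attributes, invented purely for illustration.
CASES = {
    "case_a": {"elections", "multiparty_competition", "independent_courts"},
    "case_b": {"elections", "multiparty_competition"},
    "case_c": {"elections"},
    "case_d": {"hereditary_rule"},
}


def extension(intension, cases=CASES):
    """Return the sorted names of cases that exhibit every attribute in the intension."""
    return sorted(name for name, attrs in cases.items() if intension <= attrs)


# Moving up the ladder of abstraction: drop one attribute at each step.
ladder = [
    {"elections", "multiparty_competition", "independent_courts"},  # low level
    {"elections", "multiparty_competition"},                        # mid level
    {"elections"},                                                  # high level
]

for intension in ladder:
    print(len(intension), "attributes ->", extension(intension))
```

Each attribute that is dropped can only keep or enlarge the set of matching cases, never shrink it, which is the sense in which extension and intension move in opposite directions.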

Think about Weber’s famous typology on “legitimate domination”: traditional, charismatic, legal-rational. Think about the sub-attributes of each type of authority.

He wrote extensively about patrimonial authority, which he classified under traditional. If he had used only patrimonial authority, the concept would extend to fewer cases in the world. ‘Traditional’ authority, however, subsumes patrimonial and extends to more cases.

Rethinking the classical approach

David Collier and James Mahon wrote a very influential article in 1993, as an attempt to re-engage Sartori’s debate on “concept misformation”. They note that Wittgenstein’s family resemblance approach to concept formation suggests that members of a category can have a lot in common, but may not have one single attribute that they all share.

Think about the concept ‘mother’. Does this concept require that the mother be the birth-mother? Different attributes can be used as the defining properties of the same category.

They think about the problem of conceptual stretching in terms of primary and secondary categories, rather than subordinate and superordinate taxonomies. ‘Mother’, ‘capitalism’ and ‘democracy’ are primary categories; birth mother, electoral democracy and liberal market are secondary categories.

Unlike in the classical approach, the differences are contained within the primary radial category. Conceptual stretching is avoided by adding adjectives.

In classical forms of taxonomical categorization, the problem of conceptual stretching is resolved by dropping an adjective (authoritarianism) whereas in more contemporary usage in political science it is resolved by adding an adjective (bureaucratic authoritarianism).

Extension is gained by adding a secondary category.

A long-standing debate in comparative politics concerns which attributes of democracy we should use to differentiate a democratic regime from a non-democratic regime. Elections, party choice, contestation, participation, accountability, protection of civil rights, equal opportunities, rule by the people, and social equity are all contested concepts, and each has specific attributes.

But does every ‘democracy’ have them all?

Clearly, not every democracy has all these attributes but they share a family resemblance.

A researcher may have six cases of democratic regime, and each case may have five out of six shared attributes, with each case missing a different attribute. No single attribute is then common to all six cases.

But they all radiate from a core meaning of “rule by the people”.
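A minimal sketch of this family resemblance structure, assuming six hypothetical cases and six attribute labels borrowed loosely from the list above: each case lacks exactly one attribute, so no attribute is shared by all cases, yet any two cases still overlap heavily.

```python
from itertools import combinations

# Six attribute labels, chosen for illustration from the debate on democracy.
ATTRIBUTES = [
    "elections", "contestation", "participation",
    "accountability", "civil_rights", "social_equity",
]

# Six hypothetical cases: case i has every attribute except the i-th one.
cases = {
    f"case_{i + 1}": set(ATTRIBUTES) - {missing}
    for i, missing in enumerate(ATTRIBUTES)
}

# Classical requirement: attributes that every single case shares.
shared_by_all = set.intersection(*cases.values())

# Family resemblance: the smallest overlap between any two cases.
min_pairwise_overlap = min(
    len(a & b) for a, b in combinations(cases.values(), 2)
)

print("shared by all cases:", shared_by_all)
print("minimum overlap between two cases:", min_pairwise_overlap)
```

Under a strict classical definition the category would have no defining attribute at all, even though every pair of cases has four of the six attributes in common.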

Defining and conceptualizing democracy is not a simple case of operationalising variables and properties. Conceptualization is a highly contextual process. You have to know your cases. Gerring suggests that all concept formation in the social sciences can be understood as an attempt to mediate between the eight criteria listed below. Let’s briefly discuss each one.

Eight criteria

But how does this all apply to the day-to-day practice of research design, and ordinary language use? For Gerring (1999), concept formation is an ongoing interpretative battle that involves a set of tradeoffs (as opposed to rules) between eight different criteria:

- Coherence: How internally coherent and externally differentiated are the attributes of the concept?

- Operationalisation: How do we know it when we see it?

- Validity: Are we measuring what we purport to be measuring?

- Field utility: How useful is the concept within a field of closely related terms?

- Resonance: How resonant is the concept in ordinary language/specialised discourse?

- Contextual range: How far can it travel?

- Parsimony: How many attributes does it have?

- Analytic/empirical utility: How useful is it in your research design?

Concept formation ultimately refers to a) the phenomena to be defined, b) the properties or attributes that define them, and c) the label covering both.

Gerring points out that our research is heavily shaped by the concepts we use. For example, the terms “neoliberal” and “ordoliberal” have very different connotations, and attributes, despite the fact that both concepts point to a similar economic philosophy. The same can be said of “globalisation” and “internationalisation”.

The concept ‘ideology’ is said to have 35 different attributes. This means it can effectively have hundreds of different definitions. Should we therefore abandon the concept in favour of a more specific term such as “political belief system”?

It matters a great deal how we define our terms and how we use them in our scientific discourses. Humans are bipedal and featherless (i.e. an observable fact with a clear empirical referent). But this is not what we mean by the term ‘human’.

Definitions, shared meanings, and clear indicators of what we are talking about are crucial in social science discourse (and ordinary language use). Concepts perform a referential function. But this is not their only purpose. They also serve to differentiate, define and explicate. The colour blue begins where green and brown end.

Familiarity is important. But if a common concept or term serves to confuse a plurality of ideas then the creation of a new concept is often necessary. But this is not an invitation to produce a Derridaesque set of neologisms (yes, I did just create a new term!)

As Gerring points out, on a practical level, effective phrase-making (la casta in recent Spanish politics) can be no more separated from the task of concept formation than good writing can be separated from good research.

Concepts, for good or ill, also aspire to power, which is usually captured by their resonance. Good concepts stick because they resonate.

Good concepts do not have endless definitions. Abbreviation shortens discourse and increases understanding. Mathematical and logical language is an obvious example of this. But so is the Chinese language. It is parsimonious.

Parsimonious concepts reduce ambiguity and therefore it is easier for a theory to “grow legs” if it resonates and is parsimonious (i.e. does not have many different attributes).

Coherence suggests that the internal attributes which define the concept belong together. This is arguably the most important criterion for concept formation.

It differentiates blue from green, liberal from conservative. Coherence in the core meaning can make for a very sticky concept, which travels through time and space. Democracy as “rule by the people” is arguably the highest level of coherence in defining democracy.

Internal coherence is inseparable from external differentiation.

But differentiation is always a matter of degree. If you don’t know it when you see it, then you can’t tell it (the concept) from other things. For example, what is the difference between power, force, authority and violence? Good concepts need operationalization.

How can we operationalise a concept such as efficient “administrative reform” in the public sector? Geddes uses the concept “meritocratic recruitment”. This concept is coherent, differentiated and quite parsimonious.

But how theoretically useful is it? Is it the most important aspect of administrative reform?

Typologies

Finally, allow me to say a few words about the central importance of typologies in the process of concept formation. Typologies (think Weber’s typology of authority, or typologies of liberal democracy) serve multiple functions: forming concepts, refining measurement, exploring dimensionality, and organizing explanatory claims.

Conceptual typologies and categorical variables explicate the meaning of a concept by mapping out its dimensions. How should we conceptualize the process of involving civil society in policymaking? Outside Westminster majoritarian systems this has led to an important set of typologies on corporatism, concertation and pluralism.

How should we conceptualise the diversity of capitalisms that exist in market societies?

Discussion: are there typologies that are directly relevant for organising the cases in your research project? What are the core contested concepts that shape your study?